This is a writeup from a series of talks and workshops I’ve given on this topic. So far:

- Part 1 described the least you can do: not estimating at all really can work.

- Part 2 and part 3 described how, when estimates are wanted, you should avoid asking people’s opinions wherever you can – using data is less stressful and more reliable.

- Part 4 and part 5 described how, when estimates are wanted and suitable data isn’t available, you can practice quantifying what you do know now, and find ways to make decisions even when your answers have wide ranges.

This post, part 6, is the last in the series. There are many more techniques you could look into for estimation, but for this “what’s the least you can get away with” series, we’ve seen enough.

Today, we’ll cover some ideas to keep in mind no matter what estimation techniques you’re using. Hopefully these will help you get the most out of estimation, while putting the least time and effort into it.

Traps to be aware of

Again and again, organisations and individuals have as much information as they need to make a realistic prediction – and choose to ignore it. Let’s look at one version of this trap, which I’ve fallen for myself.

The sensible starting point: When estimating some new piece of work, you should look at “What have we done previously that seems at all similar to this new thing?” and use “How long did that take?” as the expectation for the new thing. Whether you have detailed task-by-task data, or an overall “x months” memory, that’s your starting value.

The trap: The new situation isn’t exactly the same as that previous one … so we think we can make adjustments to that starting value.

- We’ve learned from it, or have some more experienced team members, or know more about the domain: plan for x% reduction in a range of items.

- We’re using new technology (to build, or test, or write something for us)… which will avoid lots of what slowed us, and make us overall y% faster.

- The things we don’t have to do in this one mean z hours of work don’t need doing … and the new, different things are simple, only around w hours of work.

- Our new ways of working, new team/org structure, new risk management, etc …

Honestly, all of these things and many others probably will make some kind of difference. But it’s likely to be a lot less than you think – Fred Brooks’ 1986 “No silver bullet” paper discusses a long history of thinking tech or people changes will have a huge impact on productivity. Time and again we overestimate benefits, and gloss over some new complications.

Steve McConnell’s Software Estimation book gives the same warning: He’s explored lots of complex formulas that look impressive, and estimation frameworks with lots of “sliders and dials”, and concluded that these hurt much more often than they help. It’s very, very easy to stray out of “we can reasonably adjust this based on data” and into “we would like this undertaking to go well, and want to grab onto anything that might indicate that’s true”.
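To see how quickly a handful of plausible-looking adjustments stack up, here is a small sketch. Every number in it is made up for illustration (a 12-month baseline and four hoped-for savings), and `apply_adjustments` is a hypothetical helper rather than anything from Brooks or McConnell. No single adjustment looks outrageous, yet together they cut the estimate nearly in half.

```python
def apply_adjustments(baseline_months, fractional_savings):
    """Apply each hoped-for saving in turn to the historical baseline."""
    estimate = baseline_months
    for saving in fractional_savings:
        estimate *= 1 - saving
    return estimate


# Hypothetical baseline: the most similar past project took 12 months.
baseline = 12.0

# Hypothetical hoped-for savings, each one individually "reasonable":
# experience gained, new technology, dropped scope, new ways of working.
hoped_for = [0.15, 0.20, 0.10, 0.10]

adjusted = apply_adjustments(baseline, hoped_for)
total_cut = 1 - adjusted / baseline

print(f"Baseline: {baseline:.1f} months")
print(f"After adjustments: {adjusted:.1f} months ({total_cut:.0%} shorter)")
```

With these invented inputs the adjusted figure comes out at roughly 6.6 months. Nothing in the history supports that number; it is four guesses multiplied together.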

Perhaps my favourite example of people disregarding the evidence comes from the book Thinking, fast and slow.

It discusses a group of expert estimators, writing a textbook on good estimation practice. They were keen to know when their book might be finished. They used all their own best advice, looking at rate of progress so far and completion times for similarly-complex textbooks. The completion range this came up with was much, much longer than any of them wanted it to be. So, they applied adjustments: reasons they were over the slow parts now, process changes that would make final parts faster, and settled on a shorter estimate. Several years later, the book was eventually finished right when that first discarded prediction had thought it would be.

From this, and various similar pitfalls, the term “planning fallacy” was coined for this cognitive bias. The book looks into reasons for it – it’s linked to lots of other biases – and at the root of it is an unreasonable level of optimism that’s probably been beneficial for humans to have overall. If we were more realistic about how unlikely some of our plans were to work, there are surely all kinds of important things people would never have attempted.

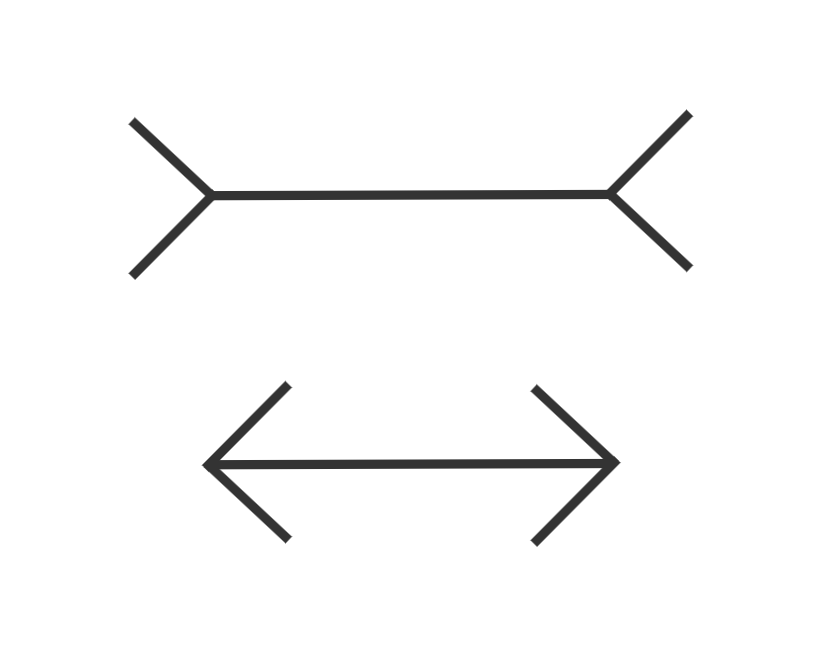

One thing this book really brought home for me: Knowing this type of bias exists doesn’t help at all in avoiding falling for it. The author, Daniel Kahneman, compares this to the Müller-Lyer illusion and other optical illusions: even once you’ve measured and convinced yourself that the horizontal lines are the same length, they still look different.

Knowing the trick doesn’t mean your eyes stop falling for it. All you can learn is: Know not to trust your judgement when you’re looking at lines that have alternating fins at the end. And similar lessons can be applied to all the cognitive biases. It’s not “I’ve read about the planning fallacy, so it won’t affect me.” Instead: “This is the kind of situation where the planning fallacy affects our judgement – so let’s not rely on judgement here.”

There’s lots of interesting stuff in this book. Some of the more dramatic insights have been called into question as part of an overall “replication crisis” for all kinds of study (excellent chapter-by-chapter examination here), but lots of it still stands, in particular the anchoring bias described in part 5 of this series. It happens all the time, with numbers that don’t matter at all – the first number you think of strongly pulls your final answer towards it. How much stronger would that pull be with a number that you, or your boss, would really like the answer to fit in with?

So, what helps?

- Use whatever simple methods are available to compute the range for this estimate, based on what you know about similar work in the past (parts 1 to 5 of this blog post series are useful).

- There are probably some differences in the work and how you’ll approach it that really will have some effect – but acknowledge, to yourself and others, that putting values on how much that will change the time taken is just speculation.

- Keep a list of what these are, along with: We hope that x will make a substantial difference… we’ll know that’s happening when…

- It’s absolutely fine to add notes on how much difference you hope for, what will make you confident that’s coming to pass, and when you’ll be able to use it to revise the estimate. Re-forecast as more data becomes available.

- It’s absolutely not fine to use these hoped-for gains now, at the up-front planning and decision making stage, to commit to plans, release dates, funding limits, or anything else. Hope is not a plan.

I’ve had some success with this; “We can’t know if x will have an impact” is a very different discussion than “We can’t know yet if x will have an impact, here’s a plan for when we can know that and how to incorporate that information.”
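As a minimal sketch of that advice, assuming you have rough durations for a few similar pieces of past work: the range comes only from history, and the hoped-for gains are recorded next to it rather than subtracted from it. The durations, the `Hope` record and the `historical_range` helper are all hypothetical names and numbers, made up for illustration.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Hope:
    """A hoped-for improvement, tracked beside the estimate, not inside it."""
    what: str      # the change we hope will help
    signal: str    # "we'll know that's happening when..."
    revisit: str   # when there should be enough data to re-forecast


def historical_range(past_durations):
    """Return (shortest, median, longest) duration of similar past work."""
    ordered = sorted(past_durations)
    return ordered[0], median(ordered), ordered[-1]


# Hypothetical durations, in weeks, of past work that seemed similar.
past_weeks = [9, 14, 11, 20, 12]
low, typical, high = historical_range(past_weeks)

# Hypothetical hopes: written down, but not applied to the numbers above.
hopes = [
    Hope(
        what="New test tooling",
        signal="Regression runs finish within a day for three releases in a row",
        revisit="After the third release",
    ),
]

print(f"Plan on {low} to {high} weeks (typically around {typical}).")
for hope in hopes:
    print(f"Hope: {hope.what}. Signal: {hope.signal}. Revisit: {hope.revisit}.")
```

The design choice is that nothing in `hopes` feeds into the range. When a signal actually shows up, the new data joins `past_weeks` and the range gets recomputed, which is the re-forecasting step.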

Painful discussions

With the best will in the world, with people aware of the limited ability to predict the future and helping each other to interpret data and avoid cognitive biases, estimation’s still a difficult subject. But in lots of situations the conversation’s much worse than that. Acknowledging what might make it painful is an important tool in getting to more useful answers.

Lots of people’s only experience of estimating is getting put on the spot, asked to use their expert judgement to give an answer. It can be seen as a moral failing (both by yourself, and by others) if you can’t make predictions that regularly match what happens in reality. Lots of people never get talked through the many traps that make prediction so hard, and assume that any competent professional should be able to do this. No wonder people feel stress and aversion to it.

More than that, estimates have a history of being misused: as an opening bid in a negotiation, or as a commitment that only the person giving answers will get held to and grilled about. In some cases, you really have to wonder why there’s a need to ask the workers for an initial input at all, if other people are going to overrule it – and again, the impulse to back away and avoid saying anything can be strong.

You might not work somewhere that takes things to these extremes – but these feelings are all there, to some extent, even in honest and well-intentioned environments. Some things that help:

- Remember – and talk frequently about – responsible use of estimates. Any insights people can offer into “what we’re getting into” are to be appreciated, not used to go make ill-advised promises and then come back to complain about. Part 4 of this series had advice on sensible usage.

- Be comfortable with how little we can rely on any predictions – questions like “how long”, “how much value” and “should we do this at all” are all a similar class of question, and everyone involved in a piece of work can offer their own best insights on one of these. Part 5’s workshop is one way to try building this understanding, and there’s lots more you can do.

- When looking for different techniques or conversations, remember this advice from part 1: “In a high-trust environment, almost anything can work. In a low-trust environment, almost nothing will.” Work on that.

Actually getting comfortable with uncertainty

The idea that no matter how much effort or expertise we put in, the future will remain fairly unknowable, is something that lots of people just don’t want to accept. We sometimes talk about this issue as if it’s software industry specific: Other industries can predict just fine, so why can’t we? That’s really not the case! Berlin-Brandenburg Airport was my go-to example for a long time – its completion was replanned numerous times over many years, and got pushed back again and again. That’s open and running now, and joins a long list of overrunning examples (Scottish Parliament building, most of the Olympics, famous movies including Apocalypse Now…)

For all kinds of “what’s going to happen” questions, we’re drawn to simple, definite answers. There are studies showing that knowing what outcomes are coming – even bad ones – causes much less stress than dealing with uncertain ones. I feel that in some cases, people want simple answers so much that they’re willing to accept any that are offered, even when they know they’re likely wrong. This can put you in a difficult situation: the truth is that we can know something about the future, and being happy with that uncertain but still useful prediction is the best we can do. But there are often people who will confidently expound definite dates and numbers, sounding much more informed and competent than your realistic assessments. What should you do then?

One thing to think about: understand what type of question is really getting asked. Sometimes it’s not “What is our most informed, realistic assessment of how long this will take?” Sometimes it’s more “What’s an answer that’s acceptable for us to move forward with, and we’ll deal with the consequences later?”

The book Future Babble: Why expert predictions fail and why we believe them anyway, by Dan Gardner, takes a look at this issue across all kinds of human endeavours. There’s lots of evidence of people wanting simple, confident answers – and the fact that these answers frequently turn out to be wrong tends not to hurt the confident predictors’ reputations or careers. In one chapter, he describes it like playing a game of “Heads I win, tails you forget we made a bet.”

So what do you do about that?

- As ever: the Steve McConnell books (Software Estimation, Rapid Development) are packed with ideas about what misunderstandings or motivations might be happening. A recurring theme: there’s no point in being right if you can’t get anyone to listen to you. I wrote some notes on this in How to disappoint people, part 3.

- Understanding what game’s being played is important. If people around you aren’t actually asking for a good-faith, best-understanding estimate of how long something will actually take … you’re never going to succeed by trying to get better at producing that.

- If you’re in a cycle of people insisting on using simple, wrong answers and it never seeming to matter – can you find anyone who’s interested in finding a different way? I’ve tried to offer suggestions throughout this series on how you might get a useful conversation started. This really depends on the environment you’re working in; if people just don’t seem curious about understanding how to improve, this idea may not get taken up right now – but you can offer.

You can politely remind people that things don’t have to be this way: for lots of people, the only experience they’ve had is to take an uncertain estimate, make that the exact deadline, and go through stress and drama to see if they’re successful. The idea that we could try something different next time might be appealing.

– Part 4 of this series

Summing up

There are a lot of choices about how to estimate in this series, and I’ve been deliberately looking at the minimal ones – there’s a whole world of other things you could do too. I’ve sometimes felt I need to get this “right” first time with a new team or new org: If I agree to too much process now, I’ll be stuck with it forever.

An idea I try to keep in mind is that we can keep changing things. I don’t need to wait until we’ve changed lots about how we talk to each other, and how we think about work, before we can start moving towards the minimal approach I’d like. We can put in place more structure for now, and take it away again once it’s no longer needed.

Ann Pendleton-Jullian and Dave Snowden have done lots of work on describing different types of scaffold, and how you might think about using them – it’s a metaphor with lots of potential. This overview describes 5 types of scaffolding, and a blog post from Sonja Blignaut expands on how these might be applied.

For us, thinking about estimation: there’s a lot you might need to put in place before you can move to the type of approach you’d like. With scaffolding in place, you get to decide what’s the next step you’d like to make towards that. And as we’ve discussed in various parts of this series, the change might have little to do with estimation skills at all. What changes could you make, so that being “good” at estimation becomes less important?