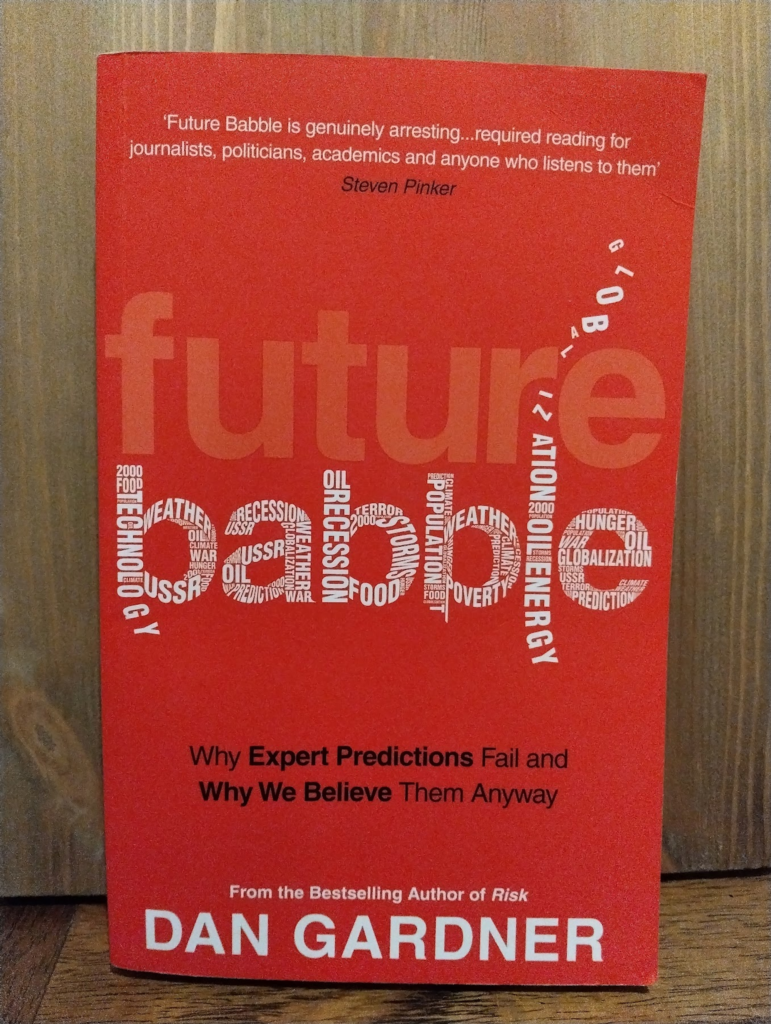

I read “Future babble: Why expert predictions fail and why we believe them anyway” (Dan Gardner, 2010) when I was researching a series of blog posts on “Minimum Viable Estimation”. There’s sometimes a view that the software industry in particular has problems with estimation – but this book has lots of evidence that predicting what’s going to happen is a real struggle in all fields of human endeavour.

In that series, I mentioned one interesting aspect from the book:

There’s lots of evidence of people wanting simple, confident answers – and the fact that these frequently turn out to be wrong tends not to hurt these confident predictors’ reputations or careers. In one chapter, he describes it like playing a game of “Heads I win, tails you forget we made a bet.”

– Minimum Viable Estimation, part 6

There’s plenty of other stuff in the book worth mentioning, so let’s take a look.

A mountain of examples

The book’s full of examples of where people or whole industries have invested lots of effort into making predictions, and done very poorly. The examples come from academic studies, from history, and from various interviews the author’s done himself.

It starts with a tour of failed predictions through the whole 20th century – we were completely surprised by every single major development. But people still eagerly listen to expert predictions.

We try to eliminate uncertainty however we can. We see patterns where there are none. We treat random results as if they are meaningful. And we treasure stories that replace the complexity and uncertainty of reality with simple narratives about what’s happening and what will happen.

– Future babble, chapter 1

As a specific example: Predicting future oil prices is important, and companies are willing to invest lots in anything that might help with it. Experts have been forecasting the price of oil constantly since the 19th century, but nobody’s ever done well at it. In 2007, economists Ron Alquist and Lutz Kilian examined all the sophisticated methods for predicting the price of oil one month, one quarter, or one year in the future. “Predict no change” does better than any of them.

In interviews, the author found lots of senior people involved in oil prediction were well aware of its performance. Lord John Browne, former CEO of BP: “I can predict confidently that it will vary. After that, I can gossip with you.”

Still, the oil forecasting industry keeps growing because lots of people are prepared to pay for something top oil executives consider worthless. “There’s a demand for the forecasts so people generate them,” Schwartz [high-level consultant] says with a shrug.

– Future babble, chapter 2

It’s not that everything is impossible to predict. The book talks about different difficulties:

- Some systems are linear and very predictable far into the future, like the motion of the planets; lots of people assume we can predict everything in the same way.

- Others are chaotic, like the weather – immeasurably small differences in the current state lead to huge differences in the future. We can predict weather OK for a few days ahead, and then we’re guessing.

- Oil forecasting depends on human choice, technological factors, and lots more – it looks impossible to find a good method for forecasting it.
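To make the “chaotic” case concrete, here is a minimal sketch of sensitivity to initial conditions. It uses the logistic map, a standard toy chaotic system, as a stand-in; it is not a weather model and doesn’t come from the book, and the starting value, the size of the nudge, and the function name are all my own assumptions.

```python
def logistic_map_trajectory(x0, steps, r=4.0):
    """Iterate x -> r * x * (1 - x), a textbook chaotic system when r = 4."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting states that differ by one part in ten billion (arbitrary choice).
a = logistic_map_trajectory(0.2, 100)
b = logistic_map_trajectory(0.2 + 1e-10, 100)

# Gap between the two trajectories at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]

for step in (0, 5, 20, 40, 60):
    print(f"step {step:3d}: gap = {gaps[step]:.3e}")
```

The gap stays tiny for the first few steps and then grows until the two runs have nothing to do with each other, which is roughly the “OK for a few days, then we’re guessing” pattern.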

This is all very familiar – I wrote recently about Tiani Jones’ QCon talk that covered bounded applicability, socio-technical systems, and lots more from Cynefin and other complexity topics.

Are we measurably bad at this?

The examples throughout the book – specific and big-picture – present a compelling story about how bad we are at prediction. But it might be just as easy to pick different examples and present a story of people doing great. I was pleased the book looked at how we can objectively quantify things.

It discusses how difficult it is to quantify whether experts are “bad” at predicting – there are lots of big failures, but how many times are people correct, overall? And how do you deal with “vague”, arguable results?

The author proposes using calibration measurements in the style of How to measure anything (I’m a big fan of that book, and wrote about its calibration method): for a large collection of clearly-defined predictions, when an expert says they are 70 percent confident, did it happen around 70 percent of the time?
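As a rough sketch of what that calibration check could look like: group predictions by the confidence the expert stated, then see how often each group came true. The data below is made up purely for illustration, and the function name is my own rather than anything from either book.

```python
from collections import defaultdict


def calibration_table(predictions):
    """Group (stated_confidence, came_true) pairs by stated confidence.

    Returns {stated_confidence: (count, observed_frequency)}.
    """
    buckets = defaultdict(list)
    for confidence, came_true in predictions:
        buckets[round(confidence, 1)].append(came_true)
    return {
        confidence: (len(outcomes), sum(outcomes) / len(outcomes))
        for confidence, outcomes in sorted(buckets.items())
    }


# Made-up predictions: well calibrated at 70%, overconfident at 90%.
sample = (
    [(0.7, True)] * 7 + [(0.7, False)] * 3
    + [(0.9, True)] * 6 + [(0.9, False)] * 4
)

for confidence, (count, observed) in calibration_table(sample).items():
    print(f"said {confidence:.0%}, happened {observed:.0%} of {count} predictions")
```

With real data you’d want many predictions per confidence level before reading much into the observed frequencies.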

Along with calibration, we need “discrimination” – someone who’s frequently confident in their predictions (giving confidences like 90%) is much more useful than someone who gives lots of 50% ones.

Other measures: can the expert beat simple rules like “predict no change” (that is, yesterday’s weather)? Do long-term predictions (several years) come true?
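Here’s a small sketch of the “predict no change” comparison, assuming we score forecasts by mean absolute error (my choice of metric, not necessarily the one used in the studies the book cites). The price series and the “expert” forecasts are invented numbers, just to show the mechanics.

```python
def mean_absolute_error(forecasts, actuals):
    """Average size of the miss, ignoring direction."""
    return sum(abs(f - a) for f, a in zip(forecasts, actuals)) / len(actuals)


def no_change_forecasts(history):
    """Forecast each period as equal to the previous period's actual value."""
    return history[:-1]


# Invented data: six periods of prices, and an expert's forecasts for periods 2-6.
prices = [50, 52, 51, 55, 54, 58]
expert = [56, 49, 57, 50, 61]

actuals = prices[1:]
baseline_mae = mean_absolute_error(no_change_forecasts(prices), actuals)
expert_mae = mean_absolute_error(expert, actuals)

print(f"no-change baseline MAE: {baseline_mae:.1f}")
print(f"expert MAE:             {expert_mae:.1f}")
print("expert beats baseline" if expert_mae < baseline_mae else "baseline wins")
```

The point of the baseline is that it costs nothing to produce, so a forecasting method only earns its keep if it does reliably better than this.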

Several chapters cover Dr Philip Tetlock’s research with this kind of measurement. I’ve heard of Tetlock (he’s written several books about prediction), but I haven’t read his work or got a sense of how well-regarded it is in general. From the Wikipedia summary, his “Good Judgment Project” sounds like it was well thought through and had decisive outcomes. From experiments with hundreds of experts over many years, Dr Tetlock has found most experts have very poor calibration and discrimination: they don’t beat random predictions, and do worse than using the “predict no change” rule.

However, experts who accept the world is uncertain, doubt they have the right answers, and readjust when they get new information, do far better than those who confidently predict something and stick to it. Borrowing from a line by the ancient Greek poet Archilochus, “The fox knows many things, but the hedgehog knows one big thing”, Tetlock named these two types of expert foxes and hedgehogs respectively.

In the complex, hard-to-predict fields we’re often interested in, there’s nobody who can give reliable long-term forecasts; but in the nearer term (sometimes up to 12 months ahead), with caveats and limited confidence, foxes do far better at identifying what can be known.

Drawn towards hedgehogs

“Hedgehog” thinking (one big idea) is common, and the book covers lots of reasons for that:

- We like thinking we can predict the future, and thinking we can spot patterns and tell the story of next steps is appealing. This can be worse for fields you’re an expert in – there’s a huge range of data you can give more or less weight to, and stories you can craft to make things fit. The book references Thinking, fast and slow for examples of anchoring, the availability heuristic, representativeness and more.

- Being wrong, repeatedly, tends not to hurt reputations; the book gives several examples of authors and media personalities who made the same confident but wrong prediction about world events repeatedly, over decades. Some people will point out where you were wrong, but you can get rich and famous by not listening to them – that’s got to be a motivator to stick to your big idea, right?

- If someone’s confidence in their prediction is high, people tend to believe they’re probably right; in any situation where you want to be listened to, be seen as knowledgeable, gain respect, there’ll be a draw towards expressing things with certainty.

Various studies suggest there’s a “confidence heuristic” at work. People tend to think that predictors who seem absolutely certain are good forecasters, while those who use phrases like “it is probable this will happen but not certain” are less competent.

People “took such judgements as indicators that the forecasters were either generally incompetent, ignorant of the facts in a given case, or lazy, unwilling to expend the effort required to gather information that would justify greater confidence” (Yates, Price, Lee, Ramirez, Good Probabilistic Forecasters: The ‘Consumer’s Perspective’, International Journal of Forecasting, 1996)

– Future babble, chapter 6

Like other heuristics, this isn’t a conscious “they sound sure of themselves, they must be right” decision – it happens automatically, meaning we don’t get an easy chance to realise what faulty reasoning we’re using.

A summary of this summary

There’s lots more in the book – have a read if this writeup has interested you!

Some conclusions I took from the book:

- Prediction, for lots of topics, is hard to do with any level of certainty – but you can get useful probabilistic forecasts, and make them more useful by updating them as new information comes in.

- Despite this “foxlike” approach being the most accurate and useful, it’s often not what people are looking for – confident but probably wrong predictions might be more appreciated. They’re easier to produce, often have little negative outcome for you when you’re wrong, and if you happen to get one right you’ll be lauded.

- This is very much not just a software industry thing, or a politics thing, or anything else – across all kinds of industry and fields of study, we’re very poor at predicting and prefer not to face up to that fact.