In past instalments, this series has introduced the topic, dug into OKR origins, checked what else was going on while companies used them, and given ideas on a few things it’s easy to miss.

Today, we’ll be talking about one big thing that people might not have told you about OKRs:

Honestly, you don’t need them at all.

Every process we add to our ways of working takes time and adds complexity. Sometimes that’s needed – but I think that everything you do needs to carry its own weight. Does it bring you enough benefit to justify the overhead it’s adding? What do you gain from adding these extra meetings, extra tracking, and extra things that need your attention? And how much could you strip it back and still be able to operate?

These questions are at the heart of where lots of agile ideas came from – instead of huge requirements documents and test plans, extensive progress tracking meetings and reports, you can cover all the “what are we building and how is it going” questions with big visible charts and regular short conversations. For most kinds of work, something to answer those questions and help steer direction is needed, and this feels like a good minimal answer.

What about the kinds of question OKRs help with? What’s the least you could do there, and still have a shared understanding of progress?

I think the answer is: you could do nothing at all. When I first tried OKRs, they were a new-ish idea to lots of people, and most teams I worked with had no quarterly goal tracking at all. In the 10 years since then they’ve become pretty ubiquitous across the tech industry, and have started to feel inevitable. If OKRs aren’t working for you, the questions are often about how you can make them work better, or what different framework might do a better job of quarterly goal tracking.

We know how to do work without putting OKRs or anything similar around it – I did that for years before ever hearing of OKRs, and lots of frameworks (XP, Scrum and others) never mentioned anything like them. I do agree that it can feel like medium-term direction is missing – beyond the scale of “what should we aim for this week / fortnight”, it can feel hard to pick among the many possible options. John Cutler calls this the “messy middle” range. I think the issue there is often a lack of concrete strategy – some “just specific enough” guidance to help make decisions about what work to do to move in the right direction.

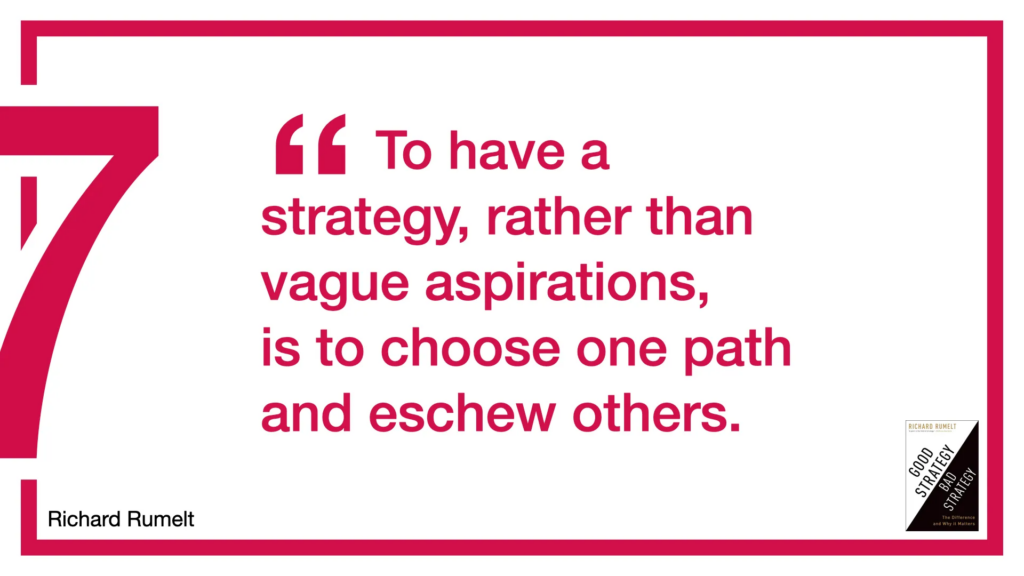

“Strategy” gets talked about a lot, and I’m not sure there’s any agreed-upon standard definition of what one looks like – but a book whose advice I really appreciated is “Good Strategy Bad Strategy” by Richard Rumelt (summary here).

As much as picking “good things to do”, Rumelt stresses the importance of deciding on the things you’re not going to be doing. This is the hard part: “There is difficult psychological, political and organizational work in saying ‘no’ to whole worlds of hopes, dreams, and aspirations.”

He suggests a framework for describing “the kernel” of your strategy – this has 3 sections:

- A diagnosis: defines the challenge your business/product faces. There’s no “right” answer to what the challenge is – you can agree which of the many aspects of your situation seem critical. Simplify the real, complex reality into an easier-to-understand story.

- A guiding policy: the overall approach you’re choosing to cope with this challenge. This isn’t about exact actions, but it does constrain the kinds of actions you’ll take – what direction have you agreed is a sensible one to move in? And therefore, which other directions are you going to leave unexplored for now?

- A set of coherent actions: describing how that policy will be carried out. These should work meaningfully together, rather than being a grab-bag of unrelated (or conflicting) actions.

I’m far from an expert on strategy, but I’ve found this “kernel” process very useful: it helps you pick a course of action now, and gives obvious answers to the huge variety of possible ideas that come up over time. It’s very clear that you’re picking one possible story and route through the overwhelmingly complex space of all the things you could choose to focus on – and if that turns out to be a poor choice, you can change to another one later.

With a well-described strategy in place, there’s less need for OKRs at all (those weekly/fortnightly “what should we do” questions have a guiding direction to answer them), and it’s also easier to set them (rather than “we could do anything” every three months, you have a constrained idea of what kinds of work you might do, and the OKRs can be more about putting timeboxes and measures on that bigger picture).

In lots of teams, a strategy like this is missing – and it’s important to realise that OKRs do nothing to help fill that gap. Without an overall strategy, OKRs just move that weekly/fortnightly “we could do anything, what’ll we pick” question up to the quarterly cadence. Adrian Howard describes this well:

> Just like writing “as a user” on all your user stories does not make you a user centric company, writing OKRs does not magically give you a strategy and vision.

So, what are they good for?

None of this is to say that there’s no point using OKRs at all. Acknowledging that you can do without them completely, and that there’s inevitable overhead in setting, tracking and communicating them, prompts you to keep asking: is there anything we can gain from using OKRs that justifies their cost?

I do like the idea of timeboxes as a forcing function. This is a big part of what made Scrum and other agile methods so useful when first introduced. At the time, releasing to production was a huge effort for lots of software products – weeks or months of integrating, testing, amending. Often this was so difficult that people left it for a year or more between each release. Imagine the shock of Scrum saying not just that this should be done “more often”, but specifying that every sprint – every 4 weeks – a complete, tested running version would be put into production. Some orgs couldn’t even run their regression tests in 4 weeks. That constraint was a useful push to look seriously at what would make testing and deployment fast, repeatable, and automated – and to look again at the smallest unit of “useful” functionality they could add in a single release.

Over time, this initially unbelievable pace of 4-weekly releases has come to seem slow – the “10+ deploys per day” talk from Flickr in 2009 was another step in the industry-wide realisation of what’s possible. If you haven’t looked much into the DORA metrics, you really should; there are lots of insights there into why this stuff matters. If you haven’t focused on making frequent releases painless and low-overhead, it almost doesn’t matter how good your strategy or creative ideas are: there’s too much work involved in getting any of them into the hands of users.

OKRs can be used in a similar way, moving one step up from fast and reliable deployments. When I first tried OKRs, the challenge of doing all of the following inside 3 months sounded daunting:

- picking a user-facing metric,

- coming up with ideas to improve it,

- getting one of those ideas into production,

- understanding whether it had moved the metric,

- then picking a different idea and trying that if the first one didn’t work

If doing that with a range of different OKRs, every 3 months, sounds difficult where you work, this could be a useful prompt to look at why. Who decides what ideas to try? How often do you get qualitative and quantitative feedback from real users? Improving these can be important if you want to find out which of your ideas are any good.

Another good use of OKRs: coordinating with other teams in a large organisation. For one OKR, we focused on retiring lots of old services and getting out of older technologies, putting us in a good place to move faster in future. This meant asking several other teams to do work so we could get disentangled. Like politicians thinking about spending “political capital”, choosing this focus meant making a list of people we needed to pester and persuade now, knowing we’d be able to thank them and be out of their hair after these few short tasks. The self-imposed deadline of our OKR was a good enough reason to go persuade them that the time to do this was now. If you need to keep coordinating with everyone, all the time, it’ll be hard to keep moving.

Similarly, when a new initiative needs another team to work with yours, asking them to make it one of their (few, important) OKRs can be a way to test whether they’re seriously committing to help. In large orgs with lots of stakeholders it can be easy for teams to say “yes” to everyone, meaning we might find ourselves competing for their time. At OKR-setting time, I’ve told people that if they can’t make this shared work their main, visible objective, then we’ll come back another time when it can be.

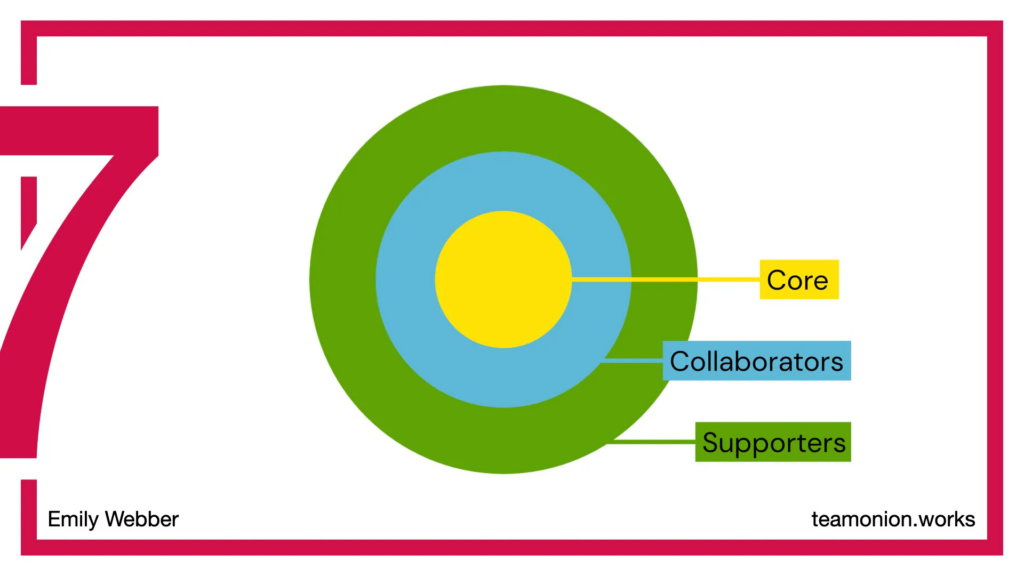

In all of these situations, we were thinking about which teams would have to do lots of work with us for this OKR and which we’d be able to leave on the sidelines. I didn’t have the excellent Team Onion model from Emily Webber for most of that time, but once I found it I recognised it’s perfect for this kind of thing.

Useful, visual categories for who’ll need to be involved if you choose different objectives can help discussions – in a large org, there’s rarely anything a team can achieve completely alone. Agreeing shared responsibility helps everyone understand what’ll be expected of them for the next few months.

So, about these 10 things…

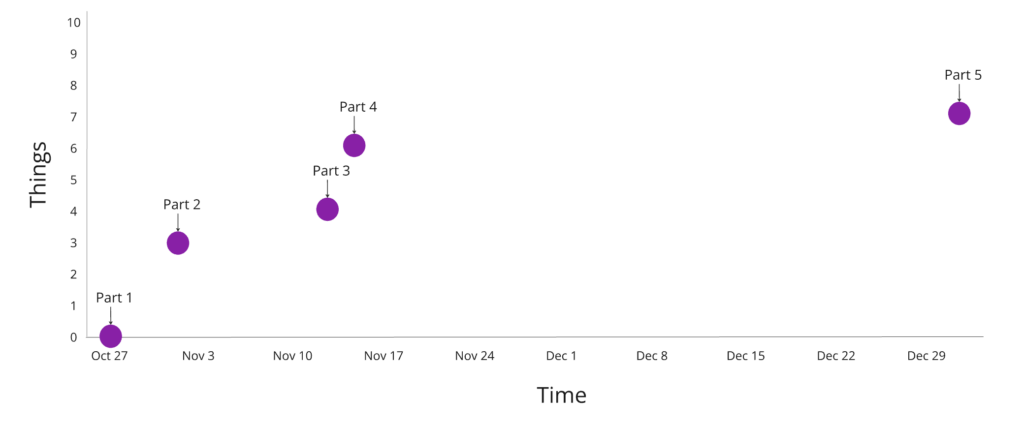

We’re making progress! But, on my arbitrary “10 things by the end of the quarter” objective, it’s mixed news. The graph was looking good back in October and November, but we’ve had a long pause before this post appeared.

A classic case of overconfidence and poor planning: I was making good progress so felt OK about pausing to write 2 other blog posts (about messing about with TypeScript and the meetup I co-organise). Then, I was sick for a week (not planned but these things do happen), and got better just in time for Advent of Code to take up all my attention for most of December (entirely predictable).

Following the rules of OKRs, however, I think I’ve managed to save the quarter. This post’s getting published on 31 December, and it takes us to the “this counts as success” magic 7 out of 10 result. Wonderful! The last 3 points will come in the New Year.

Update: Part 6 of this series, out now! Taking us up to thing number 9 out of 10.